AI in STEM: Inspiring Classroom Projects

Overview

General project information and requirements

Subjects: Sciences, ICT, interdisciplinary projects

Other disciplines: Coding, arts

Age groups: Primary, lower secondary, upper secondary

Time frame: Project week or weekly class/extracurricular activity for one half-term period

Required materials/hardware/software/online services and tools:

- Computers or tablets with internet connection / Arduino Uno or Raspberry Pi

- Webcam

- Teachable Machine by Google or GenAI (GDPR compliant)

- Coding: block-based programming platforms (Playground raise, Scratch, PictoBlox) for younger students; Python for older students

A video tutorial on how to prepare, train, and test a machine learning model with two classes of images in PictoBlox can be found on our YouTube channel.

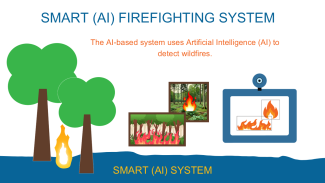

AI Firewatchers: Detecting and preventing wildfires

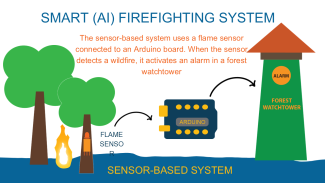

Wildfires are a growing environmental problem, intensified by climate change. They destroy ecosystems and contribute to global warming. The project proposes two complementary solutions for wildfire detection and control. The first solution uses a flame sensor to detect fire and trigger alarms at the forest watchtower. The second, more innovative solution uses artificial intelligence and machine learning to identify fire patterns in forest images. When it detects a wildfire, the system automatically activates a water tank mechanism and sends a notification to a smartphone via cloud services (Adafruit IO and IFTTT).

View the project poster and the project video by the team from Agrupamento de Escolas de Esmoriz - Ovar Norte, Portugal

© Andreas Mnich

Age group: 14–16 years

Required materials:

- Cardboard for the physical model (watchtower, tree)

- Hardware: Arduino Uno, flame sensor, relay, water tank with mini water pump, LED, buzzer

- Software: PictoBlox programming environment, IoT cloud platforms (Adafruit IO and IFTTT)

The project integrates two solutions for detecting and combating wildfires that complement each other for an effective response. Both combine hardware and software, merging physical sensors and artificial intelligence to shorten response time and improve accuracy.

- The sensor-based system utilizes a flame sensor connected to an Arduino Uno board to detect infrared radiation emitted by flames. When fire is detected, the system immediately triggers a sound alarm and lights up an LED at a forest watchtower, alerting local response teams.

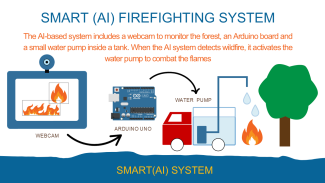

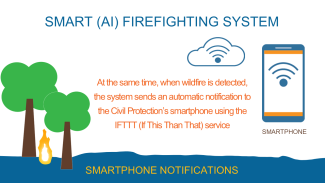

- The AI-based smart solution analyzes images for fire patterns. Developed on the PictoBlox platform, the AI model is trained to recognize flames and to distinguish between small and large-scale fires. This approach is designed for precise detection and allows real-time monitoring of fire progression. When the AI detects a fire, it sends a signal to the Arduino Uno, activating a relay that switches on a water pump to extinguish the fire. This automated response minimizes reaction time and enables immediate intervention without human assistance. The system also includes a remote monitoring mechanism via Adafruit IO and IFTTT: upon fire detection, a wireless signal is transmitted to the IFTTT platform, which sends an automatic notification to the coordination team's smartphones, ensuring rapid communication.
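The project builds this link in PictoBlox, and its own code is not shown here. For readers working in Python, the sketch below illustrates roughly what such a call looks like against Adafruit IO's REST API (the v2 "create data" endpoint with an `X-AIO-Key` header). The username, feed key and key are placeholders, and the assumption is that an IFTTT applet watching the feed (not shown) forwards each new value as a phone notification.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your own Adafruit IO username, feed key and AIO key.
AIO_USERNAME = "your_username"
AIO_FEED_KEY = "fire-alerts"
AIO_KEY = "your_aio_key"


def build_fire_alert_request(label: str, confidence: float) -> urllib.request.Request:
    """Build (but do not send) a POST request that adds one data point to an Adafruit IO feed."""
    url = f"https://io.adafruit.com/api/v2/{AIO_USERNAME}/feeds/{AIO_FEED_KEY}/data"
    payload = json.dumps({"value": f"{label} ({confidence:.0%})"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"X-AIO-Key": AIO_KEY, "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code (needs a network connection)."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    req = build_fire_alert_request("large fire", 0.93)
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Building the request separately from sending it makes it possible to check the payload offline before connecting the real feed.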

Project phases

- In the initial phase, students conduct research on environmental sustainability, particularly on wildfires, their causes, and environmental impacts. They also explore key concepts related to Arduino, sensors, artificial intelligence, machine learning, and the Internet of Things (IoT), acquiring the necessary knowledge to develop the system.

- During the second phase, students create scenarios representing elements of the forest environment, such as trees and a watchtower. They design and build these elements using cardboard, integrating them into the test scenario. This stage helps them develop skills in digital design and manual prototyping.

- In the development phase, students assemble the electronic components (flame sensor, LED, relay, and mini water pump), connecting them to an Arduino Uno board. They programme the two systems, sensor-based and AI-based, using the PictoBlox programming environment. For the AI-based system, they use PictoBlox's Machine Learning tools to create and test models for fire detection in images. Students also implement connectivity with IoT platforms (Adafruit IO and IFTTT), enabling remote system monitoring.

- During testing and optimisation, they fine-tune sensor sensitivity parameters and evaluate the AI’s accuracy in detecting fires. They also test the effectiveness of the automated fire suppression system, ensuring proper activation of the water pump in different scenarios.

- Finally, in the presentation and awareness phase, students document the process, create explanatory materials, and share the project with the school community and local entities.

The AI-driven component relies on a machine learning model trained to identify wildfires based on image analysis. The process consists of the following phases:

- Data collection: Representative images of various fire types are gathered, including small-scale flames and large-scale wildfires.

- Data classification: Images are categorized based on fire intensity and characteristics to enhance AI accuracy.

- Model training: The model is trained using labeled datasets to recognize different types of fires effectively.

- Model testing: The trained model undergoes validation to assess its precision in identifying and classifying wildfires.
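Model testing usually means comparing the model's predictions on held-out images with the true labels. A minimal, library-free sketch, assuming three hypothetical classes ("no fire", "small fire", "large fire"), computes overall accuracy and per-class recall:

```python
from collections import Counter


def evaluate(true_labels, predicted_labels):
    """Return overall accuracy and a confusion count keyed by (true, predicted)."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    confusion = Counter(zip(true_labels, predicted_labels))
    correct = sum(n for (t, p), n in confusion.items() if t == p)
    return correct / len(true_labels), confusion


def recall_per_class(confusion):
    """Share of each true class that the model labelled correctly."""
    totals, hits = Counter(), Counter()
    for (t, p), n in confusion.items():
        totals[t] += n
        if t == p:
            hits[t] += n
    return {c: hits[c] / totals[c] for c in totals}


if __name__ == "__main__":
    # Hypothetical test results for five held-out images.
    truth = ["no fire", "small fire", "large fire", "large fire", "small fire"]
    preds = ["no fire", "large fire", "large fire", "large fire", "small fire"]
    accuracy, confusion = evaluate(truth, preds)
    print(accuracy, recall_per_class(confusion))
```

For a fire alarm, recall on the fire classes matters more than overall accuracy: a missed fire is costlier than a false alarm.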

Arduino programming and image recognition:

Following AI training, the Arduino Uno board is programmed using PictoBlox’s Machine Learning extensions. A webcam is integrated for real-time image recognition, classifying fires based on predefined categories.

Fire intensity-based response:

Depending on fire severity, the Arduino board executes a programmed action, activating a water pump to extinguish the flames. The water volume dispensed is determined by the intensity of the detected fire.
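A pump with a roughly constant flow rate dispenses a volume proportional to how long it runs, so a volume "determined by the intensity" can be implemented as a run time per class. The sketch below is hypothetical: the class names, run times and the 0.8 confidence threshold are illustrative values, not the project's settings, and the relay switching itself (done by the Arduino) is left out.

```python
# Hypothetical class labels and pump run times; the project's real values are not stated.
PUMP_SECONDS = {"no fire": 0.0, "small fire": 2.0, "large fire": 6.0}
MIN_CONFIDENCE = 0.8  # illustrative threshold below which a prediction is ignored


def pump_duration(label: str, confidence: float) -> float:
    """Return how long (in seconds) to switch on the relay, and thus the pump, for one prediction."""
    if confidence < MIN_CONFIDENCE:
        return 0.0  # uncertain prediction: do not spray, wait for the next frame
    return PUMP_SECONDS.get(label, 0.0)  # unknown labels never trigger the pump


if __name__ == "__main__":
    print(pump_duration("large fire", 0.95))
```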

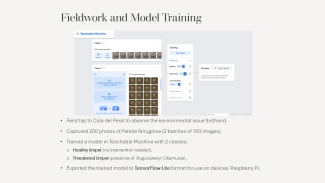

Saving Patella ferruginea: Protecting the local habitat with the help of machine learning

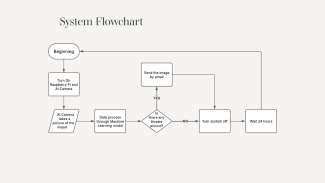

This project, developed in Algeciras (Strait of Gibraltar), aims to protect the endangered limpet Patella ferruginea from the invasive seaweed Rugulopteryx okamurae using AI-based monitoring. The team designed an autonomous monitoring system that activates every 24 hours, when a camera captures images of the area where the limpet lives. These images are processed on a Raspberry Pi to assess environmental conditions: each picture is run through a machine learning model trained in Teachable Machine, which identifies the limpet and assesses potential threats from the seaweed. If a risk is detected, an automated email with the image attached is sent for conservation monitoring.

The project highlights the potential of AI and IoT in marine conservation, providing an innovative and energy-efficient method for monitoring endangered species. By combining technology and environmental awareness, students actively contribute to the development of a system that could help protect the local habitat.

View the project poster and the project video by the team from Algeciras, Spain.

© Andreas Mnich

Age group: 14–16 years

Required materials:

The Patella ferruginea limpet is one of the most endangered marine species in the Mediterranean, facing increasing threats due to the invasion of Rugulopteryx okamurae in the Strait of Gibraltar Natural Park. This invasive seaweed is altering marine ecosystems and endangering the limpet’s habitat. To address this issue, this project focuses on developing an autonomous monitoring system that leverages AI and IoT technologies to detect and assess the risk posed by the seaweed.

A Raspberry Pi, powered by a solar panel and battery, has been deployed in a coastal area where the limpet is found. Equipped with a camera, the system captures images periodically. These images are processed using a machine learning model trained in Teachable Machine, which identifies the presence of Patella ferruginea and determines whether the invasive seaweed has encroached upon its habitat. The system operates in a 24-hour cycle to ensure continuous monitoring while optimizing energy consumption. At scheduled intervals, the Raspberry Pi and camera power on to capture an image of the habitat. The AI model analyzes the image to detect the presence of both the limpet and the seaweed. If the system determines that the seaweed is invading the habitat, it sends an email alert with the captured image. If no threat is detected, the system shuts down to conserve energy and remains off for 24 hours before restarting the cycle.
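One monitoring cycle (capture, classify, email on threat) can be sketched with Python's standard `email` package. This is a minimal sketch under assumptions: the camera and model are passed in as stand-in functions, the threat is assumed to correspond to the dataset class "Limpet NOT OK", the addresses are placeholders, and actual delivery (for example via `smtplib.SMTP_SSL`) and the 24-hour power-off are left out because they depend on the mail account and the power hardware.

```python
from email.message import EmailMessage
from typing import Callable, Optional, Tuple

THREAT_LABEL = "Limpet NOT OK"  # class name from the project's dataset; assumed to mean "seaweed threat"


def build_alert(image_bytes: bytes, label: str, confidence: float,
                sender: str, recipient: str) -> Optional[EmailMessage]:
    """Return an email with the JPEG image attached, or None if no threat was detected."""
    if label != THREAT_LABEL:
        return None
    msg = EmailMessage()
    msg["Subject"] = f"Patella ferruginea alert: {label} ({confidence:.0%})"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("The monitoring station detected a possible Rugulopteryx okamurae invasion. Image attached.")
    msg.add_attachment(image_bytes, maintype="image", subtype="jpeg", filename="habitat.jpg")
    return msg


def run_cycle(capture: Callable[[], bytes],
              classify: Callable[[bytes], Tuple[str, float]],
              send_email: Callable[[EmailMessage], None],
              sender: str, recipient: str) -> bool:
    """Run one capture-classify-alert cycle; return True if an alert was sent."""
    image = capture()
    label, confidence = classify(image)
    alert = build_alert(image, label, confidence, sender, recipient)
    if alert is not None:
        send_email(alert)
    # Powering down until the next cycle is hardware-specific and not shown here.
    return alert is not None
```

Passing the camera, model and mail sender in as functions keeps the cycle logic testable on a laptop before it is deployed on the Raspberry Pi.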

Project phases

To develop a reliable AI model, a diverse dataset of images representing both healthy ("Limpet OK") and affected ("Limpet NOT OK") limpets was needed. As part of the project, students participated in a field trip to a coastal area, where they personally captured images of limpets in their natural environment. This hands-on experience allowed them to understand the importance of data collection in AI projects while also learning about marine ecosystems.

The collected images were then used to train the model in Teachable Machine, a user-friendly tool by Google. For training, the model was configured with 500 epochs, a batch size of 16, and a learning rate of 0.001 to ensure effective learning. The AI analyzed the images to recognize patterns distinguishing between healthy and affected limpets. After training, the model was tested with new images, successfully classifying limpets with high accuracy based on the characteristics it had learned. The results demonstrated a clear distinction between the two categories, confirming the reliability of the system in identifying threats to the species. The trained model was then exported in the TensorFlow Lite format for use on the Raspberry Pi.
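On the Raspberry Pi, the exported model has to receive images in the format it was trained on. The sketch below assumes the floating-point TensorFlow Lite export, which (as far as we know, like Teachable Machine's other image exports) takes a 224×224 RGB image with pixel values scaled to [-1, 1], and a `labels.txt` whose lines look like `0 Limpet OK`. The `tflite_runtime` interpreter calls appear only as comments, and the model file name there is an assumption; check the input details of your own model before relying on any of this.

```python
from typing import List, Sequence, Tuple

import numpy as np


def load_labels(text: str) -> List[str]:
    """Parse labels.txt lines such as '0 Limpet OK' into class names (index dropped)."""
    return [line.split(" ", 1)[1] for line in text.strip().splitlines()]


def preprocess(rgb: np.ndarray) -> np.ndarray:
    """Scale a 224x224x3 uint8 image to [-1, 1] and add a batch dimension."""
    if rgb.shape != (224, 224, 3):
        raise ValueError(f"expected a 224x224x3 image, got {rgb.shape}")
    x = rgb.astype(np.float32) / 127.5 - 1.0
    return x[np.newaxis, ...]


def top_prediction(scores: Sequence[float], labels: List[str]) -> Tuple[str, float]:
    """Return the most likely class name and its score."""
    scores = np.asarray(scores).ravel()
    i = int(np.argmax(scores))
    return labels[i], float(scores[i])

# Assumed usage with tflite_runtime on the Raspberry Pi (not executed here):
# from tflite_runtime.interpreter import Interpreter
# interpreter = Interpreter(model_path="model_unquant.tflite")  # assumed export file name
# interpreter.allocate_tensors()
# inp = interpreter.get_input_details()[0]
# out = interpreter.get_output_details()[0]
# interpreter.set_tensor(inp["index"], preprocess(rgb_image))
# interpreter.invoke()
# label, score = top_prediction(interpreter.get_tensor(out["index"])[0], labels)
```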

Students also helped assemble and program the monitoring system. They tested the system in different conditions, refining the AI model based on new data. A custom enclosure to house all components was designed in Tinkercad and 3D-printed for assembly and outdoor protection.

In Search of Lost Time: Helping cognitively impaired people navigate their living space

This project uses AI to help people with memory disorders recognise home environments and navigate their living space. Fifth-grade students explored the concept of memory starting from Proust's À la recherche du temps perdu (In Search of Lost Time), then studied the challenges of Alzheimer's disease with experts. They trained a machine learning model to recognise rooms and typical objects, creating an assistant in Scratch 3.0 that, when shown an object, suggests the appropriate room and the activities that can be performed there.

Through an interdisciplinary approach integrating literature, neuroscience, and programming, children developed a practical AI assistant to support people with memory disorders, transforming technology into a tangible tool to improve daily life. The project shows that AI can be taught at an early age, fostering STEM skills and ethical awareness.

View the project poster and the project video by the team from Vittorio Veneto, Italy.

© Andreas Mnich

Age group: 9–12 years

Required materials:

- Home design magazines to explore the layout of domestic spaces and furniture

- Common household objects (towels, plates, toothbrushes, pillows) to connect items with their respective environments

- Hardware: tablets and/or computers

- Software: Scratch 3.0, Machine Learning for Kids for AI training

In this PDF file, you can find an overview of the project phases and the respective activities, required materials and student output.

The project was inspired by the daily challenges faced by people with memory disorders, such as elderly individuals with Alzheimer’s or other forms of dementia. The goal was to develop a technological solution that helps them recognize domestic environments and understand the function of each space through artificial intelligence. The class embarked on an interdisciplinary journey combining literature, neuroscience, biomedical engineering, and programming, allowing fifth-grade students to explore AI’s potential in a hands-on and meaningful way.

Project phases

- Memory, remembrance, and technology: The journey began with a reflection on memory, inspired by Marcel Proust’s famous Madeleine passage in À la recherche du temps perdu. Students discussed how memories are linked to sensory experiences and what happens when memory deteriorates. The class invited a social worker specializing in cognitive disorders, who explained how dementia patients perceive reality. Through simulations and real-life examples, students gained insight into the disorientation these individuals experience and how clear reference points can improve their quality of life.

- Artificial intelligence and decision trees: To understand how AI processes data, the class collaborated with a biomedical engineer, who introduced the concept of decision trees. Students engaged in an unplugged activity called "Where are the keys?", simulating an object detection system. They worked in groups to create decision-making schemes that allowed an AI to find a missing object based on location, colour, shape, and proximity to other items. This exercise demonstrated the complexity of AI recognition and the need for proper training datasets.

- Unplugged activity on object detection, tracing, and retrieval: Before transitioning to programming, students participated in an unplugged activity to understand how AI can identify, track, and retrieve objects (see "Unplugged activity" for details).

- Creating the AI home assistant: After understanding machine learning mechanisms, students designed an AI assistant capable of recognizing domestic environments and helping individuals navigate their homes (see "Creating the AI assistant" for details).

Unplugged activity

- Object Detection (Recognizing objects): Students created a grid in the classroom and placed various everyday objects within it. Each student had a specific task: to find a given object without knowing its exact position, relying only on descriptions provided by others. This helped them understand how AI recognizes objects by analyzing patterns and visual characteristics.

- Object Tracing (Tracking objects): After identifying an object, students had to track its movement through different environments. Some students were assigned to move or hide the object, while others had to record its movement, simulating how AI tracks moving objects.

- Object Retrieval (Recovering objects): In the final phase, students were given a clue about where an object had been left. To locate it, they had to use reasoning based on the tracking data collected earlier.

This introduced the concept of pattern recognition and how AI retrieves information from incomplete datasets.

This activity clarified how object recognition systems work, helping students develop a tangible understanding of how AI processes images for detection and classification.

Creating the AI assistant

After understanding machine learning mechanisms, students designed an AI assistant capable of recognizing domestic environments and helping individuals navigate their homes.

1. Defining environments and objects: Students classified key home spaces.

- Bathroom → Objects: towels, toothbrush, soap

- Kitchen → Objects: plates, glasses, food

- Bedroom → Objects: pillows, blankets, lamp

They then mapped possible actions (e.g., "In the bathroom, you can wash your hands").

2. Training the AI model: Using Machine Learning for Kids, students uploaded images of rooms and objects. The model was trained to recognize spaces with high accuracy.

3. Building the interface in Scratch 3.0: Students programmed an interactive system in Scratch 3.0. The script

- captures an image from the user,

- uses the AI model for classification,

- provides a voice/text message explaining the recognised environment,

- suggests actions (e.g., "Here, you can wash your hands").

4. Testing and improvements: Students tested the system with real images and improved the dataset for better accuracy.
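The assistant's logic (classify, then explain the room and suggest an activity) can be expressed in a few lines of Python for readers who prefer text-based code. This is a hypothetical translation, not the students' Scratch project: the room and object lists come from step 1, the bathroom sentence follows the project's example, while the kitchen and bedroom sentences and the 0.7 confidence threshold are invented for illustration.

```python
# Rooms and objects from step 1; kitchen and bedroom actions are illustrative assumptions.
ROOMS = {
    "bathroom": {"objects": {"towel", "toothbrush", "soap"},
                 "action": "Here, you can wash your hands."},
    "kitchen": {"objects": {"plate", "glass", "food"},
                "action": "Here, you can prepare and eat a meal."},
    "bedroom": {"objects": {"pillow", "blanket", "lamp"},
                "action": "Here, you can rest and sleep."},
}


def suggest(label: str, confidence: float, threshold: float = 0.7) -> str:
    """Turn a classifier result (room or object name) into a spoken/text message."""
    if confidence < threshold:
        return "I am not sure. Please show me the object again."
    for room, info in ROOMS.items():
        if label == room:
            return f"This is the {room}. {info['action']}"
        if label in info["objects"]:
            return f"The {label} belongs in the {room}. {info['action']}"
    return "I don't know this object yet."


if __name__ == "__main__":
    print(suggest("toothbrush", 0.9))
```

Asking the user to try again below a confidence threshold avoids sending a disoriented person to the wrong room.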

See4Me: Assisting visually impaired people to navigate everyday life

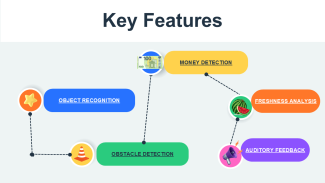

Students develop an intelligent guidance system to reduce the challenges visually impaired individuals face in their daily lives. Using a Raspberry Pi 5 and an AI-supported camera, the system recognises objects and gives auditory feedback, helping the user with functions such as product recognition when shopping, money verification, obstacle detection, and fruit and vegetable freshness analysis. It does not require an internet connection and can therefore work anywhere. This lets visually impaired individuals move independently and safely and meet their daily needs more easily.

View the project poster and the project video by the team from Atatürk Mesleki ve Teknik Anadolu Lisesi, Türkiye

© Andreas Mnich

Age group: 16–18 years

Required materials:

- Hardware: Raspberry Pi 5, USB Camera, Bluetooth headphones or speaker

- Software: AI-supported software libraries

Visually impaired individuals face many challenges in areas ranging from shopping and walking on the street to checking money and selecting food. This project aims to enhance their independence and improve their quality of life by providing essential information (product name and contents, obstacle location, amount of money, fruit and vegetable freshness, etc.) in real time through auditory feedback.

For this, students develop machine learning models in areas such as object recognition, text reading, and freshness analysis, and ensure that the system has a user-friendly interface.

Project components:

- Object recognition and product information reading: The USB camera connected to the Raspberry Pi 5 scans the product held by the user or the object in front of them. The image is processed and the object is classified with the help of OpenCV and similar libraries. The name of the detected object (and, for a shop product, its contents or usage information) is read out to the user.

- Money identification: The camera recognises the money held by the user. The machine learning model detects different banknotes and coins. The system audibly announces the identified amount.

- Obstacle detection and warning system: The camera continuously scans the environment. The position and distance of the obstacle are calculated. Warnings such as "There is a wall in 3 steps" or "There is a staircase ahead" are given to the user.

- Fruit/vegetable freshness analysis: Image processing techniques are used to analyse the colour and texture of fruits or vegetables. The user is informed whether they are fresh or not.

- Auditory Feedback (Text-to-Speech, TTS): All this information is converted into voice commands through TTS. It is delivered to the user via Bluetooth headphones or a speaker.
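Two of the simpler steps, turning an estimated distance into a spoken step count and adding up recognised banknotes and coins, are easy to sketch. Everything here is an assumption: the 0.7 m step length, the currency name and the input format (values in hundredths, as a hypothetical recogniser might return them); the distance estimate itself and the TTS output are outside the sketch.

```python
import math

STEP_LENGTH_M = 0.7  # assumed average step length; would need calibrating per user


def obstacle_warning(obstacle: str, distance_m: float) -> str:
    """Build the sentence passed to TTS for an obstacle at the given distance."""
    steps = math.floor(distance_m / STEP_LENGTH_M)  # round down: warn early rather than late
    if steps < 1:
        return f"There is a {obstacle} directly ahead"
    return f"There is a {obstacle} in {steps} step{'s' if steps != 1 else ''}"


def announce_amount(values_in_hundredths, currency: str = "lira") -> str:
    """Sum recognised notes/coins (e.g. 2000 for a 20 note) into a spoken amount."""
    whole, hundredths = divmod(sum(values_in_hundredths), 100)
    return f"You are holding {whole}.{hundredths:02d} {currency}"


if __name__ == "__main__":
    print(obstacle_warning("wall", 2.2))
    print(announce_amount([2000, 500, 50]))
```

Rounding the step count down errs on the side of safety: the obstacle is announced as slightly closer than it really is.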

Winning project: Predictive AI saves our planet

In this sustainability-oriented project, which was awarded second place at the AI Challenge final, AI is used to predict illegal fishing before it happens instead of only reacting when it does. The team looked at satellite pictures and vessel data to work out where and when illegal fishing is most likely to happen. The students played an important part in each stage: identifying the problem, doing research, developing ideas, manufacturing a prototype and, finally, evaluating it (PRIME).

View the project poster and the project video by Sophie Cradock and Harry Hannam from Thomas Hardye School, Dorchester, UK

In the Problem Identification stage, the team realised that illegal fishing is a big problem for the ocean, damaging marine life and taking away food from communities who rely on fishing. The students spent time talking about the issue, watching videos, and looking at statistics to see how bad the problem is.

During the Research stage, the team looked into how technologies like satellites and AI are already being used to track fishing activities. They focused on how AI could help not just catch illegal fishermen but also predict when and where they might fish illegally. Tools like Global Fishing Watch use satellite data and vessel trackers to keep an eye on fishing boats.

In the Idea Development stage, the students worked together to come up with a new idea. They decided to use AI that can predict when and where illegal fishing might happen, based on data like fishing patterns and sea temperatures. This way, authorities could stop the illegal fishing before it happens, which would help protect the oceans.

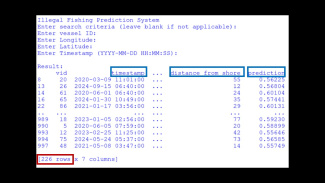

In the Manufacturing stage, they used the research to build a model that uses AI to predict illegal fishing and tested it with real data to see how well it works. They created a mock dataset in which it was unknown whether boats were fishing illegally, and the AI model (a Random Forest Regressor) predicted 226 of the 1,000 boats to be illegal, based on the target features of the training data. This share (22.6%) is in line with estimates from Global Fishing Watch suggesting that about 20% of fishing activity globally is unregulated or illegal.
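The team's model and data are not reproduced here, so the following only illustrates the technique named above: a scikit-learn `RandomForestRegressor` trained on 0/1 "illegal" labels, whose averaged output is thresholded to count flagged vessels in an unlabelled set. The features (AIS transponder gaps, distance to a marine protected area, sea temperature), the labelling rule and the 0.5 threshold are all hypothetical, so the printed count says nothing about the project's 226/1000 result.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor


def make_vessels(n, rng):
    """Generate n synthetic vessel records (hypothetical features and labelling rule)."""
    ais_gap_h = rng.exponential(2.0, n)    # hours with the AIS transponder switched off
    dist_mpa_km = rng.uniform(0, 100, n)   # distance to the nearest marine protected area
    sea_temp_c = rng.normal(18, 3, n)      # sea surface temperature
    X = np.column_stack([ais_gap_h, dist_mpa_km, sea_temp_c])
    # Hypothetical ground truth: long AIS gaps, especially near a protected area, count as illegal.
    illegal = ((ais_gap_h > 4) & (dist_mpa_km < 30)) | (ais_gap_h > 8)
    return X, illegal.astype(float)        # 1.0 = illegal, 0.0 = legal


def train_model(n_train=2000, seed=0):
    X, y = make_vessels(n_train, np.random.default_rng(seed))
    model = RandomForestRegressor(n_estimators=100, random_state=seed)
    return model.fit(X, y)


def count_flagged(model, X, threshold=0.5):
    """The forest averages 0/1 leaf means, giving a risk score in [0, 1]; count scores above threshold."""
    return int((model.predict(X) >= threshold).sum())


if __name__ == "__main__":
    model = train_model()
    X_unlabelled, _ = make_vessels(1000, np.random.default_rng(1))
    print(count_flagged(model, X_unlabelled), "of 1000 vessels flagged")
```

A `RandomForestClassifier` with `predict_proba` is the more conventional choice for yes/no labels; with 0/1 targets, the regressor's output behaves similarly as a risk score between 0 and 1.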

They then evaluated their idea and drew conclusions about how successful it would be in the real world. Because it can analyze large amounts of data, the system would be able to inform authorities (such as coast guards) when the predictive AI (PAI) recognizes a pattern or trend in past satellite data.

The oceans cover over 70% of the Earth, and yet they’re facing major environmental threats that endanger marine life and ecosystems. Overfishing, plastic pollution, and climate change are huge problems for the oceans. Among the most harmful practices is illegal and unregulated fishing, one of the biggest threats to ocean health. Illegal fishing affects marine biodiversity, disrupts ecosystems, and impacts coastal communities that depend on fishing for food and income. We need to find ways to stop illegal fishing before it starts, and that’s where the "Predictive AI saves our planet" project comes in: using predictive AI to prevent illegal fishing before it happens.

Illegal fishing is a global problem with far-reaching consequences. Around 20% of the world’s catch is from illegal fishing, which harms marine species, like sharks and tuna, that are already close to extinction. What’s worse, overfishing isn’t just about the animals; it affects people. Coastal communities that rely on fishing as their primary food source and economic activity are hurt by overexploitation. Although governments and organizations are working on it, traditional methods for monitoring and enforcement are often too slow to prevent damage. This is where predictive AI could make a huge difference.

The research and project development continues, and Sophie and Harry have looked into additional methods that would improve monitoring and prevention in a cost-efficient and practical way, such as aerial drones, underwater acoustics, synthetic data analysis and others. This poster presents details on their methodology and further prospects.
